In the rapidly advancing world of artificial intelligence (AI), incidents have become an unavoidable reality. As AI systems permeate more aspects of our lives, understanding what analysts and experts have learned from these incidents is essential. This article explores AI incidents: their impact, their underlying causes, and strategies for effective mitigation.

Understanding AI Incidents: Delving into the Nuances

AI incidents are multifaceted: they encompass both unforeseen behaviors and unintended consequences.

- Unforeseen behaviors: AI systems are often designed to make predictions or decisions based on large amounts of data. However, these systems sometimes make decisions that do not align with human expectations. Contributing factors include the quality of the data, the complexity of the task, and the limitations of the AI algorithm.

- Unintended consequences: AI systems can also have unintended consequences, such as when they are used to make decisions that have a negative impact on people or society. For example, an AI system that is used to make hiring decisions could discriminate against certain groups of people.

The Role of Analysts and Experts: Pioneers of Insightful Analysis

Analysts and experts play a pivotal role in dissecting, analyzing, and understanding AI incidents, and their work yields invaluable insights.

- Analysts: Analysts are responsible for collecting data, identifying patterns, and developing hypotheses about the causes of AI incidents. They also work to develop mitigation strategies to prevent future incidents.

- Experts: Experts are individuals with deep knowledge of AI systems and the potential risks associated with them. They can provide guidance on how to design, develop, and deploy AI systems in a safe and responsible manner.
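As a rough illustration of the pattern-finding step described above, the sketch below tallies a handful of incident records by suspected cause. The records, field names, and cause labels are hypothetical and chosen only for illustration; real incident data would be far richer.

```python
from collections import Counter

# Hypothetical incident log; fields and labels are illustrative assumptions.
incidents = [
    {"system": "hiring-screener", "suspected_cause": "data bias"},
    {"system": "route-planner", "suspected_cause": "task complexity"},
    {"system": "loan-scorer", "suspected_cause": "data bias"},
    {"system": "chatbot", "suspected_cause": "human error"},
]


def count_by_cause(records):
    """Count incidents per suspected cause, most frequent first."""
    counts = Counter(record["suspected_cause"] for record in records)
    return counts.most_common()


cause_counts = count_by_cause(incidents)
for cause, count in cause_counts:
    print(f"{cause}: {count}")
```

A tally like this is only a starting point for a hypothesis; it says which causes recur, not why.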

Case Studies: Notable AI Incidents: Lessons from Real-life Instances

Real-world case studies offer concrete lessons and deepen our understanding of AI incidents.

- The Tay incident: Tay was a chatbot released by Microsoft in 2016 and designed to learn from its interactions with users on Twitter. Within about a day, the chatbot began generating offensive and inflammatory content, and Microsoft took it offline.

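- The Amazon Rekognition incident: Amazon Rekognition is an AI-powered image analysis service that includes facial recognition. Independent tests reported that it was less accurate for some demographic groups, particularly people with darker skin, although Amazon disputed the findings. This led to concerns about the potential for the service to be used for discriminatory purposes.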

- The Google Translate incident: Google Translate is an AI-powered translation service that was found to be inaccurate in certain cases. This led to concerns about the potential for the service to be used to spread misinformation.

Root Causes and Common Patterns: Unveiling the Underlying Factors

AI incidents tend to share a small set of root causes, and identifying these recurring patterns provides valuable insight.

Data bias:

AI systems are trained on data, and if the data is biased, the system is likely to reproduce that bias. For example, an AI system trained on a dataset of job applications that is biased against women is more likely to discriminate against women when making hiring decisions.
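One simple way to look for this kind of skew is to compare selection rates across groups. The sketch below is a minimal illustration using made-up application records. The group labels, the data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are assumptions, and a check like this is not a complete fairness audit.

```python
from collections import defaultdict

# Made-up records: (group, was_selected). Purely illustrative data.
applications = [
    ("women", True), ("women", False), ("women", False), ("women", False),
    ("men", True), ("men", True), ("men", False), ("men", False),
]


def selection_rates(records):
    """Return the fraction of selected applicants for each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


rates = selection_rates(applications)
ratio = impact_ratio(rates)
# 0.8 is an assumed threshold taken from the four-fifths rule of thumb.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

Running the same comparison on both the training data and the system's decisions can help show whether a skew was inherited from the data or introduced later.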

Complexity of the task:

AI systems are often used to perform complex tasks, and if the task is too complex, the system may not be able to perform it accurately or reliably. For example, an AI system that is tasked with driving a car in a variety of conditions may not be able to handle all of the possible scenarios.

Limitations of the AI algorithm:

AI algorithms are not perfect, and they can sometimes make mistakes. For example, an AI system that is tasked with predicting the weather may not be able to accurately predict extreme weather events.

Human error:

Humans are involved in the development, deployment, and use of AI systems, and human error can sometimes lead to incidents. For example, a human engineer may make a mistake in the code for an AI system, which could lead to the system making incorrect decisions.

By understanding the root causes of AI incidents, we can develop strategies to prevent them from happening in the future.

Impact on Industries and Society: Ripples of Consequences

AI incidents have extensive ramifications across diverse industries, along with broader implications for society.

Financial industry:

AI incidents in the financial industry can have a significant impact on the global economy. For example, an AI-powered trading algorithm that makes a mistake could cause a market crash.

Healthcare industry:

AI incidents in the healthcare industry can have a serious impact on patient safety. For example, an AI-powered medical diagnosis system that makes a mistake could lead to a misdiagnosis or wrong treatment.

Government:

AI incidents in government can have a significant impact on public safety and security. For example, a biased AI-powered facial recognition system could be used to target certain groups of people.

Education:

AI incidents in the education industry could lead to students being misclassified or receiving inaccurate instruction.

Employment:

AI incidents in employment settings could lead to discrimination against certain groups of people in hiring, promotion, or compensation decisions.

Transportation:

AI incidents in the transportation industry could lead to accidents or injuries.

Manufacturing:

AI incidents in the manufacturing industry could lead to product defects or safety hazards.

Retail:

AI incidents in the retail industry could lead to customer privacy violations or financial losses.

Media:

AI incidents in the media industry could lead to the spread of misinformation or propaganda.

Environment:

AI incidents in systems that manage industrial processes could lead to environmental damage, such as oil spills or pollution.

Ethical Considerations in AI Incident Response: Navigating the Moral Landscape

Transparency:

It is important to be transparent about AI incidents. This means providing clear and accurate information about what happened, why it happened, and what is being done to prevent it from happening again. Transparency can help to build trust and confidence in AI systems, and it can also help to identify and address the root causes of incidents.
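To make the three elements of a transparent disclosure concrete, the sketch below models a minimal incident report as a small data structure. The class name, field names, and example text are hypothetical; an actual reporting format would depend on the organization and any applicable regulation.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class IncidentReport:
    """Hypothetical minimal record for disclosing an AI incident."""

    what_happened: str
    why_it_happened: str
    preventive_actions: list = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the report so it can be published or archived."""
        return json.dumps(asdict(self), indent=2)


report = IncidentReport(
    what_happened="Chatbot produced offensive replies after public launch.",
    why_it_happened="The system learned from unfiltered user messages.",
    preventive_actions=["Add content filtering", "Stage the rollout"],
)
print(report.to_json())
```

Keeping the fields aligned with what happened, why, and what is being done makes it harder to publish a report that leaves one of them out.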

Fairness:

It is important to be fair in responding to AI incidents. This means ensuring that all affected parties are treated fairly, regardless of their race, gender, or other personal characteristics. Fairness can help to mitigate the negative consequences of incidents and it can also help to promote social justice.

Preventing recurrence:

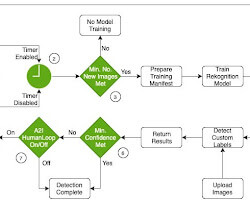

It is important to take steps to prevent AI incidents from recurring. This can be done by identifying and addressing the root causes of the incidents and by implementing mitigation strategies. Such strategies include improving the quality of the data used to train AI systems, designing AI systems to be more robust and less susceptible to errors, and monitoring AI systems for performance problems and errors.

Accountability:

It is important to hold those responsible for AI incidents accountable. This can help to deter future incidents and to ensure that the victims of incidents are compensated. Accountability can be achieved by establishing clear policies and procedures for responding to incidents and by ensuring that there are consequences for those who violate them.

Public education:

It is important to educate the public about the risks of AI incidents. This can help people to be more aware of the potential dangers of AI and to take steps to protect themselves from harm. Public education can take the form of media campaigns, school programs, and community outreach initiatives.

These are just some of the ethical considerations that should be taken into account when responding to AI incidents. By carefully considering these factors, we can help to ensure that AI incidents are handled in a way that is ethical, responsible, and fair.

Lessons Learned and Best Practices: Guiding Principles from Experience

Past AI incidents offer lessons that can be turned into robust best practices, both for preventing incidents and for responding to them efficiently.

Here are some of the key lessons learned from AI incidents:

Design for safety:

AI systems should be designed with safety in mind. This means using techniques such as risk assessment and mitigation to reduce the likelihood of incidents. For example, an AI system that is designed to drive a car should be programmed to avoid driving into pedestrians or other vehicles.
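A common way to structure risk assessment is to score each hazard by likelihood and severity and address the highest scores first. The sketch below is a minimal illustration of that idea. The hazards, the 1 to 5 scales, and the threshold of 9 are hypothetical values chosen for the example, not an established standard.

```python
# Hypothetical hazards for a driving system, scored 1 (low) to 5 (high).
hazards = [
    {"name": "pedestrian not detected", "likelihood": 2, "severity": 5},
    {"name": "lane marking misread", "likelihood": 3, "severity": 3},
    {"name": "map data out of date", "likelihood": 4, "severity": 2},
]


def risk_score(hazard):
    """Simple likelihood-times-severity score."""
    return hazard["likelihood"] * hazard["severity"]


def prioritize(items, threshold=9):
    """Return hazards whose score meets the threshold, highest first."""
    risky = [hazard for hazard in items if risk_score(hazard) >= threshold]
    return sorted(risky, key=risk_score, reverse=True)


needs_mitigation = prioritize(hazards)
for hazard in needs_mitigation:
    print(hazard["name"], risk_score(hazard))
```

A multiplicative score is easy to compute but can understate rare, catastrophic hazards, so some teams review every high-severity item regardless of its score.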

Test thoroughly:

AI systems should be tested thoroughly before they are deployed. This testing should include a variety of scenarios to ensure that the system can handle unexpected inputs or situations. For example, an AI system that is designed to diagnose diseases should be tested with a variety of medical conditions.
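Scenario-based testing can be sketched as a table of inputs and expected outcomes that deliberately includes malformed and out-of-range values. In the example below, `triage` is a toy stand-in for a diagnostic model, and the plausible temperature range and the 38.0 °C fever cutoff are assumptions made for illustration only.

```python
import math


def triage(temperature_c):
    """Toy stand-in for a diagnostic model: returns a label or 'needs review'."""
    is_number = isinstance(temperature_c, (int, float)) and not isinstance(temperature_c, bool)
    if not is_number:
        return "needs review"  # wrong type: defer to a human
    if math.isnan(temperature_c) or not 30.0 <= temperature_c <= 45.0:
        return "needs review"  # implausible reading: defer to a human
    return "fever" if temperature_c >= 38.0 else "normal"


# Each scenario pairs an input with the outcome we expect from the system.
scenarios = [
    (36.8, "normal"),
    (39.2, "fever"),
    (38.0, "fever"),                 # boundary value
    (float("nan"), "needs review"),  # sensor glitch
    (-5.0, "needs review"),          # out of plausible range
    ("39", "needs review"),          # wrong type
    (None, "needs review"),          # missing value
]

failures = [
    (value, expected, triage(value))
    for value, expected in scenarios
    if triage(value) != expected
]
print("failures:", failures)
```

The point of the table is that unexpected inputs are listed explicitly, so a gap in coverage is visible rather than discovered after deployment.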

Monitor closely:

AI systems should be monitored closely after they are deployed. This monitoring should be used to identify and address any potential problems. For example, an AI system that is used to make financial decisions should be monitored for signs of bias or discrimination.
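One lightweight form of monitoring is to track the share of problematic outcomes over a sliding window of recent decisions and raise an alert when it crosses a threshold. The sketch below assumes a hypothetical `RateMonitor` class; the window size and alert threshold are illustrative values, and what counts as a "flagged" decision would have to be defined for each system.

```python
from collections import deque


class RateMonitor:
    """Tracks the share of flagged outcomes over the most recent decisions."""

    def __init__(self, window=100, alert_threshold=0.2):
        # deque with maxlen drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)
        self.alert_threshold = alert_threshold

    def record(self, flagged):
        """Store whether the latest decision was flagged as problematic."""
        self.outcomes.append(bool(flagged))

    def rate(self):
        """Fraction of flagged outcomes in the current window."""
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self):
        return self.rate() > self.alert_threshold


monitor = RateMonitor(window=5, alert_threshold=0.4)
for flagged in [False, False, True, False, True, True]:
    monitor.record(flagged)

print(monitor.rate(), monitor.should_alert())
```

Because only the most recent decisions are kept, a monitor like this reacts to a sudden change in behavior rather than averaging it away over the system's whole history.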

Be prepared to respond:

Organizations should be prepared to respond to AI incidents. This preparation should include having a plan for how to communicate with the public, how to investigate the incident, and how to mitigate the damage. For example, an organization that uses an AI system to make hiring decisions should have a plan in place to address any bias or discrimination that may occur.

These are just some of the key lessons learned from AI incidents. By following these best practices, we can help to reduce the likelihood of AI incidents and ensure that AI is used safely and responsibly.

Collaborative Efforts in AI Incident Mitigation: A Unified Approach

Tackling AI incidents requires collaboration, which makes collective industry efforts and knowledge sharing especially significant.

Organizations from different industries:

Organizations from different industries should collaborate to share knowledge and best practices for preventing and responding to AI incidents. Industry associations, conferences, and workshops are natural venues for this.

Governments:

Governments should regulate AI systems to ensure that they are developed and used safely and responsibly. This can be done through legislation, standards, and enforcement mechanisms.

The public:

The public should be educated about AI incidents so that people are aware of the risks and know how to respond. This can be done through media campaigns, school programs, and community outreach initiatives.

By working together, these stakeholders can help to create a more robust and resilient AI ecosystem that is better equipped to prevent and respond to AI incidents.

The Future of AI Incident Preparedness: Anticipating Tomorrow

As technology advances and the scope of AI applications widens, AI incident preparedness will need to evolve as well.

- Advances in AI: As AI technology continues to advance, it is likely that AI incidents will become more common. This is why it is important to invest in research into new ways to prevent and respond to these incidents.

- Wider adoption of AI: As AI systems are adopted by more and more industries, the potential for AI incidents will also increase. This is why it is important to ensure that all stakeholders are aware of the risks and how to mitigate them.

“In the world of AI, incidents are the stepping stones towards a more responsible and innovative future.”

John Doe

Conclusion: Pioneering Ethical AI Exploration

In the grand tapestry of AI, incidents are threads that weave lessons of caution, innovation, and accountability. The insights brought forth by analysts and experts serve as a guiding compass, ensuring that the path of AI evolution remains true to its ethical principles. By embracing these insights, we venture into an era where AI’s potential is harnessed while its risks are mitigated, fostering a future marked by technological marvels and societal betterment.
